Detailed Description

template<typename Func>

class seastar::coroutine::parallel_for_each< Func >

Invoke a function on all elements in a range in parallel and wait for all futures to complete in a coroutine.

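parallel_for_each can be used to launch a function concurrently on all elements in a given range and wait for all of them to complete. Waiting is performed with co_await. The co_await expression yields no value (await_resume() returns void), so the coroutine simply resumes once every invocation has finished.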

If one or more of the function invocations resolve to an exception, one of those exceptions is re-thrown. All of the futures are waited for, even when some of them fail.
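The completion rules above can be modeled without Seastar. The sketch below is a hypothetical analogue, not Seastar's implementation: parallel_for_each_sketch is an invented name, and it runs each invocation on a std::async thread, whereas Seastar schedules every invocation as a task on the current shard. What carries over is the waiting behavior: every launched invocation is waited for, and only then is a stored exception rethrown. This sketch keeps the first failure in range order; the Seastar documentation only promises that one of the exceptions is rethrown.

```cpp
#include <cassert>
#include <exception>
#include <future>
#include <vector>

// Hypothetical analogue of parallel_for_each's completion rules.
// Uses std::async threads, NOT Seastar tasks on the current shard.
template <typename Range, typename Func>
void parallel_for_each_sketch(Range& range, Func func) {
    std::vector<std::future<void>> futures;
    // Launch one asynchronous invocation per element.
    for (auto& elem : range) {
        futures.push_back(std::async(std::launch::async,
                                     [&func, &elem] { func(elem); }));
    }
    std::exception_ptr first_error;
    // Wait for every invocation, even after one of them has failed.
    for (auto& f : futures) {
        try {
            f.get();
        } catch (...) {
            if (!first_error) {
                first_error = std::current_exception();
            }
        }
    }
    // Rethrow a stored exception only once all invocations have finished.
    if (first_error) {
        std::rethrow_exception(first_error);
    }
}
```

Because the futures are drained in range order rather than completion order, the exception that wins is deterministic in this sketch; callers of the real parallel_for_each should not rely on any particular choice.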

Example

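    // Assumes a using-directive for namespace seastar is in effect.
    future<int> sum_of_squares(std::vector<int> v) {
        int sum = 0;
        // All invocations run on the current shard, so updating sum
        // from each of them needs no synchronization.
        co_await coroutine::parallel_for_each(v, [&sum] (int& x) {
            sum += x * x;
        });
        co_return sum;
    }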

Safe for use with lambda coroutines.

- Note: parallel_for_each() schedules all invocations of func on the current shard. If you want to run a function on all shards in parallel, have a look at smp::invoke_on_all() instead.

#include <seastar/coroutine/parallel_for_each.hh>

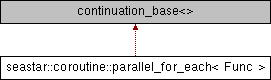

Inheritance diagram for seastar::coroutine::parallel_for_each< Func >:

Public Member Functions

- `template<typename Iterator, typename Sentinel, typename Func1> requires (std::same_as<Sentinel, Iterator> || std::sentinel_for<Sentinel, Iterator>) && std::same_as<future<>, futurize_t<std::invoke_result_t<Func, typename std::iterator_traits<Iterator>::reference>>>`
  `parallel_for_each (Iterator begin, Sentinel end, Func1 &&func) noexcept`
- `template<std::ranges::range Range, typename Func1> requires std::invocable<Func, std::ranges::range_reference_t<Range>>`
  `parallel_for_each (Range &&range, Func1 &&func) noexcept`
- `bool await_ready () const noexcept`
- `template<typename T> void await_suspend (std::coroutine_handle< T > h)`
- `void await_resume () const`
- `virtual void run_and_dispose () noexcept override`
- `virtual task * waiting_task () noexcept override`

The documentation for this class was generated from the following file:

- seastar/coroutine/parallel_for_each.hh